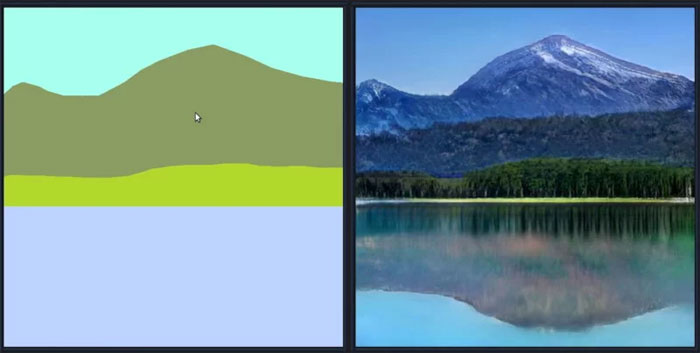

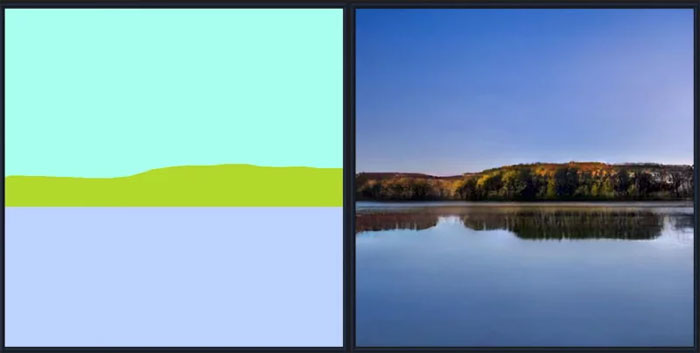

The AI tech company NVIDIA already wowed the world with its computer-generated, hyperrealistic portraits of people who don't exist, and now it is back with new AI software. Meet GauGAN, named after the post-impressionist painter Paul Gauguin, an interactive app that can turn your “rough doodles into photorealistic masterpieces with breathtaking ease.”

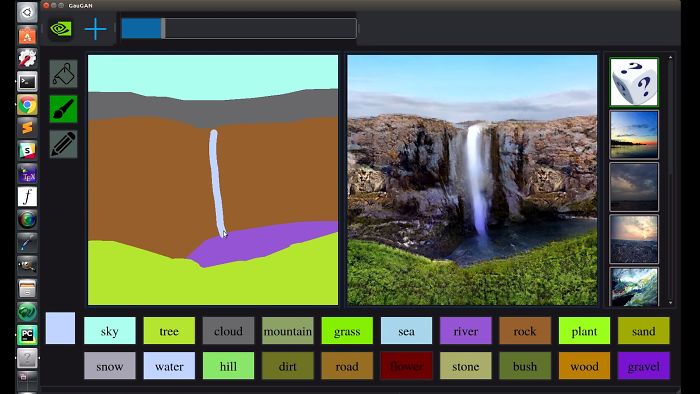

Have you ever been in an art class, attempting to re-create a photo of a cascading waterfall, only to end up with a kindergarten-level rendering? GauGAN can transform your piece in a matter of seconds. “The tool leverages generative adversarial networks, or GANs,” writes NVIDIA, “to convert segmentation maps into lifelike images.” The technology may sound like just a fun art toy, but the researchers say it could be a powerful tool in many creative fields.

Bryan Catanzaro, vice president of applied deep learning research at NVIDIA, compared GauGAN’s technology to a “smart paintbrush” that can fill in the details inside rough segmentation maps. Technology like this, the company writes, could be highly useful for architects, urban planners, landscape designers, and even game developers. “With an AI that understands how the real world looks, these professionals could better prototype ideas and make rapid changes to a synthetic scene.” Catanzaro further explained, “It’s much easier to brainstorm designs with simple sketches, and this technology is able to convert sketches into highly realistic images.”

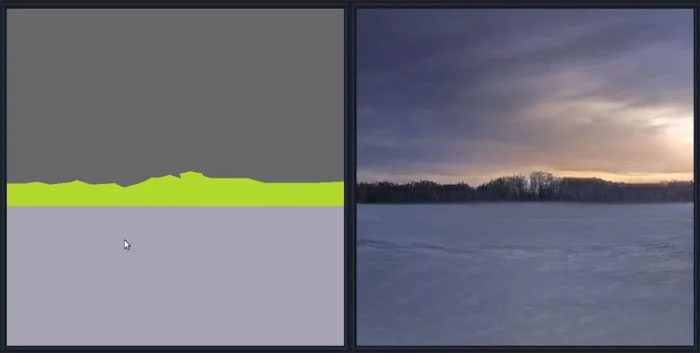

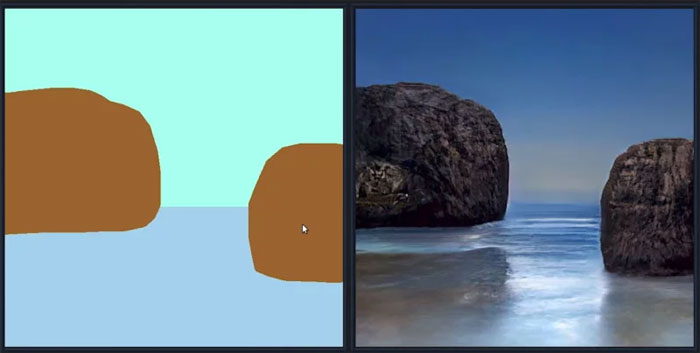

On the surface, GauGAN is simple to use. The user draws a segmentation map and then shapes the scene by labeling its segments with words like ‘sky’ or ‘snow.’ The AI, trained on millions of images, then fills in each labeled region in hyperrealistic form. If the user changes a label, say from ‘snow’ to ‘sea,’ the entire image transforms. “It’s like a coloring book picture that describes where a tree is, where the sun is, where the sky is,” Catanzaro said. “And then the neural network is able to fill in all of the detail and texture, and the reflections, shadows and colors, based on what it has learned about real images.”
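For the technically curious, here is a rough Python sketch of what a segmentation map is: a grid the same size as the image in which every cell holds a label instead of a color. Everything in it (the `relabel`, `label_counts` and `one_hot` helpers and the tiny 3-by-4 map) is hypothetical and made up for illustration; it is not NVIDIA's code. It shows why swapping ‘snow’ for ‘sea’ changes a whole region at once, and how labels are commonly turned into one 0/1 channel per class before a neural network sees them (a widespread convention, assumed here rather than taken from GauGAN).

```python
from typing import Dict, List

# Hypothetical toy example: a segmentation map is a grid of class labels,
# one label per pixel, instead of a grid of colors.
SegMap = List[List[str]]


def relabel(seg_map: SegMap, old: str, new: str) -> SegMap:
    """Return a copy of seg_map with every `old` pixel changed to `new`."""
    return [[new if label == old else label for label in row] for row in seg_map]


def label_counts(seg_map: SegMap) -> Dict[str, int]:
    """Count how many pixels carry each label."""
    counts: Dict[str, int] = {}
    for row in seg_map:
        for label in row:
            counts[label] = counts.get(label, 0) + 1
    return counts


def one_hot(seg_map: SegMap, classes: List[str]) -> List[List[List[int]]]:
    """Encode the map as one 0/1 channel per class (channels x height x width).

    Feeding labels to a network this way is a common convention and an
    assumption here, not a documented detail of GauGAN.
    """
    return [[[1 if label == c else 0 for label in row] for row in seg_map]
            for c in classes]


sketch: SegMap = [
    ["sky", "sky", "sky", "sky"],
    ["sky", "mountain", "mountain", "sky"],
    ["snow", "snow", "snow", "snow"],
]

edited = relabel(sketch, "snow", "sea")
print(edited[2])             # ['sea', 'sea', 'sea', 'sea']
print(label_counts(edited))  # {'sky': 6, 'mountain': 2, 'sea': 4}
```

The original sketch is left untouched, so the user can flip between versions; the network's job is then to paint realistic texture into every region that carries a given label.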

While this AI lacks a full understanding of the physical world, GANs still produce lifelike results thanks to a two-part system: the generator and the discriminator. The generator first creates an image and passes it to the discriminator, which has been trained on real images and judges how realistic the result looks. The discriminator's feedback then coaches the generator on how to create a more realistic image, pixel by pixel. For example, the discriminator has learned that ponds have reflections, and its feedback pushes the generator toward a more hyperrealistic result.
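The generator-versus-discriminator tug-of-war can be made concrete with the textbook GAN losses. The minimal Python sketch below assumes the discriminator outputs a probability that an image is real; the scores are invented numbers rather than output from GauGAN, and GauGAN's actual training objective differs in its details. The discriminator is penalized for calling fakes real, while the generator is penalized when the discriminator sees through its fakes. In a real system, the "coaching" is the gradient of the generator's loss flowing back through the discriminator into the generator.

```python
import math
from typing import List

EPS = 1e-12  # keeps log() away from zero


def bce(prob: float, target: float) -> float:
    """Binary cross-entropy between a predicted probability and a 0/1 target."""
    return -(target * math.log(prob + EPS) + (1 - target) * math.log(1 - prob + EPS))


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """The discriminator wants real images scored near 1 and fakes near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: List[float]) -> float:
    """Non-saturating generator loss: the generator wants fakes scored as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores (probability that each image is real).
real_scores = [0.9, 0.8]
early_fakes = [0.1, 0.2]  # early in training, fakes are easy to spot
later_fakes = [0.6, 0.7]  # later, fakes fool the discriminator more often

print("generator loss, early:", round(generator_loss(early_fakes), 3))
print("generator loss, later:", round(generator_loss(later_fakes), 3))
print("discriminator loss, early:", round(discriminator_loss(real_scores, early_fakes), 3))
print("discriminator loss, later:", round(discriminator_loss(real_scores, later_fakes), 3))
```

As the fakes improve, the generator's loss falls while the discriminator's loss rises, which is exactly the pressure that keeps both networks improving.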

In addition to the initial transformation, the human creator also has some creative agency in post-production. The app lets them apply style filters that mimic a particular painter, or even change a scene from daytime to sunset. “This technology is not just stitching together pieces of other images, or cutting and pasting textures,” Catanzaro said. “It’s actually synthesizing new images, very similar to how an artist would draw something.”

While GauGAN focuses mainly on breathtaking natural landscapes, with details such as grassy fields, blue seas and skies, that is not all the app can create. Its neural network can also fill in man-made features such as buildings and roads, and even people.

The research paper behind GauGAN was accepted as an oral presentation at CVPR (the Conference on Computer Vision and Pattern Recognition) in June, “a premier annual computer vision event.” This distinct honor goes to only about 5 percent of more than 5,000 submissions.

Watch GauGAN in action here

People couldn’t believe how realistic the images were

from Bored Panda http://bit.ly/2TgcKlO
